2020. 2. 10. 10:39 · Uncategorized

Citrix ICA Client 10.00.603 is a communication tool that lets users access any Windows-based application running on the server. All the user needs is a low-bandwidth connection (21 kilobytes) and the ICA client, which is available as a free download.

If you install the wrong NVIDIA vGPU software packages for the version of Citrix XenServer you are using, NVIDIA Virtual GPU Manager will fail to load. The vGPU Manager and guest VM drivers must be installed together.

- The 7.4.0.5932 version of Citrix XenCenter is provided as a free download on our software library. The tool can also be called 'XenCenter'. The default filenames for the program's installer are XenCenter.exe, pn.exe, pnagent.exe, XenCenterMain.exe or _4626EBBCADBD.exe etc.

- Watch the video tutorial on how to install the Citrix ICA Web Client on a Mac OS desktop.

Older VM drivers will not function correctly with this release of vGPU Manager. Similarly, older releases of vGPU Manager will not function correctly with this release of the guest VM drivers. This requirement does not apply to the NVIDIA vGPU software license server. All releases of NVIDIA vGPU software are compatible with all releases of the license server.

| Software | Releases Supported | Notes |
| --- | --- | --- |
| Citrix XenServer 7.6 | RTM build and compatible cumulative update releases | All NVIDIA GPUs that support NVIDIA vGPU software are supported. This release supports XenMotion with vGPU on suitable GPUs. |
| Citrix XenServer 7.5 | RTM build and compatible cumulative update releases | All NVIDIA GPUs that support NVIDIA vGPU software are supported. This release supports XenMotion with vGPU on suitable GPUs. |
| Citrix XenServer 7.1 | RTM build and compatible cumulative update releases | All NVIDIA GPUs that support NVIDIA vGPU software are supported. XenMotion with vGPU is not supported. |
| Citrix XenServer 7.0 | RTM build 125380 and compatible cumulative update releases | All NVIDIA GPUs that support NVIDIA vGPU software are supported. XenMotion with vGPU is not supported. |
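The support matrix above can be captured as a small lookup, which may be handy in a pre-flight check before attempting a migration. This is only a sketch: the dictionary and the function name `supports_xenmotion_with_vgpu` are hypothetical, and the data is limited to the four releases listed above.

```python
# XenMotion-with-vGPU support per XenServer release, as listed in the table above.
XENMOTION_WITH_VGPU = {
    "7.6": True,
    "7.5": True,
    "7.1": False,
    "7.0": False,
}


def supports_xenmotion_with_vgpu(xenserver_release):
    """Return True if the listed release supports XenMotion with vGPU.

    Only the major.minor part is compared, so cumulative updates such as
    "7.1.2" map to the "7.1" entry. Releases that are not listed raise
    ValueError instead of guessing.
    """
    major_minor = ".".join(xenserver_release.split(".")[:2])
    if major_minor not in XENMOTION_WITH_VGPU:
        raise ValueError("XenServer %s is not in the support list" % xenserver_release)
    return XENMOTION_WITH_VGPU[major_minor]
```

Even on a release that returns True, the GPU itself must also be one of the suitable GPUs, which this lookup does not check.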

Note: Use only a guest OS release that is listed as supported by NVIDIA vGPU software with your virtualization software. To be listed as supported, a guest OS release must be supported not only by NVIDIA vGPU software, but also by your virtualization software. NVIDIA cannot support guest OS releases that your virtualization software does not support. In pass-through mode, GPUs based on the Pascal architecture or Volta architecture support only 64-bit guest operating systems. No 32-bit guest operating systems are supported in pass-through mode for these GPUs.

Description: Using the frame buffer for the NVIDIA hardware-based H.264/HEVC video encoder (NVENC) may cause memory exhaustion with vGPU profiles that have 512 Mbytes or less of frame buffer. To reduce the possibility of memory exhaustion, NVENC is disabled on profiles that have 512 Mbytes or less of frame buffer. Application GPU acceleration remains fully supported and available for all profiles, including profiles with 512 Mbytes or less of frame buffer. NVENC support from both Citrix and VMware is a recent feature and, if you are using an older version, you should experience no change in functionality. The following vGPU profiles have 512 Mbytes or less of frame buffer:

- Tesla M6-0B, M6-0Q
- Tesla M10-0B, M10-0Q
- Tesla M60-0B, M60-0Q

Description: A VM running a version of the NVIDIA guest VM drivers from a previous main release branch, for example release 4.4, will fail to initialize vGPU when booted on a Citrix XenServer platform running the current release of Virtual GPU Manager. In this scenario, the VM boots in standard VGA mode with reduced resolution and color depth. The NVIDIA virtual GPU is present in Windows Device Manager but displays a warning sign, and the following device status: Windows has stopped this device because it has reported problems. (Code 43)

Depending on the versions of drivers in use, the Citrix XenServer VM’s /var/log/messages log file reports one of the following errors:

- An error message: vmioplog: error: Unable to fetch Guest NVIDIA driver information
- A version mismatch between guest and host drivers: vmioplog: error: Guest VGX version(1.1) and Host VGX version(1.2) do not match
- A signature mismatch: vmioplog: error: VGPU message signature mismatch

Description: Tesla M60, Tesla M6, and GPUs based on the Pascal GPU architecture, for example Tesla P100 or Tesla P4, support error correcting code (ECC) memory for improved data integrity. Tesla M60 and M6 GPUs in graphics mode are supplied with ECC memory disabled by default, but it may subsequently be enabled using nvidia-smi. GPUs based on the Pascal GPU architecture are supplied with ECC memory enabled. However, NVIDIA vGPU does not support ECC memory. If ECC memory is enabled, NVIDIA vGPU fails to start.

Resolution: Ensure that ECC is disabled on all GPUs.

Before you begin, ensure that NVIDIA Virtual GPU Manager is installed on your hypervisor.

1. Use nvidia-smi to list the status of all GPUs, and check for ECC noted as enabled on GPUs.

       # nvidia-smi -q
       NVSMI LOG
       Timestamp: Tue Dec 19 18:
       Driver Version: 384.99
       Attached GPUs: 1
       GPU 0000:02:00.0
       Ecc Mode
           Current: Enabled
           Pending: Enabled

2. Change the ECC status to off on each GPU for which ECC is enabled.
   - If you want to change the ECC status to off for all GPUs on your host machine, run this command: `# nvidia-smi -e 0`
   - If you want to change the ECC status to off for a specific GPU, run this command: `# nvidia-smi -i id -e 0`, where id is the index of the GPU as reported by nvidia-smi. This example disables ECC for the GPU with index 0000:02:00.0: `# nvidia-smi -i 0000:02:00.0 -e 0`
3. Reboot the host: `# shutdown -r now`
4. Confirm that ECC is now disabled for the GPU.

       # nvidia-smi -q
       NVSMI LOG
       Timestamp: Tue Dec 19 18:
       Driver Version: 384.99
       Attached GPUs: 1
       GPU 0000:02:00.0
       Ecc Mode
           Current: Disabled
           Pending: Disabled

If you later need to enable ECC on your GPUs, run one of the following commands:

- If you want to change the ECC status to on for all GPUs on your host machine, run this command: `# nvidia-smi -e 1`
- If you want to change the ECC status to on for a specific GPU, run this command: `# nvidia-smi -i id -e 1`, where id is the index of the GPU as reported by nvidia-smi. This example enables ECC for the GPU with index 0000:02:00.0: `# nvidia-smi -i 0000:02:00.0 -e 1`

After changing the ECC status to on, reboot the host.
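The check in the first and last steps (looking for ECC noted as enabled) can be automated by scanning saved `nvidia-smi -q` output. The following is a minimal sketch, not an NVIDIA tool: the function names and the abbreviated `SAMPLE` text are assumptions made for illustration, and real output contains many more fields than the ones shown here.

```python
import re

# Abbreviated, illustrative excerpt modelled on the output shown above.
SAMPLE = """\
NVSMI LOG
Driver Version : 384.99
Attached GPUs : 1
GPU 0000:02:00.0
    Ecc Mode
        Current : Enabled
        Pending : Enabled
"""


def ecc_modes(smi_text):
    """Map each GPU id to its {'current': ..., 'pending': ...} ECC mode."""
    modes = {}
    gpu = None
    in_ecc = False
    for raw in smi_text.splitlines():
        line = raw.strip()
        gpu_match = re.match(r"GPU\s+([0-9A-Fa-f:.]+)$", line)
        if gpu_match:
            gpu = gpu_match.group(1)
            in_ecc = False
        elif line.lower().startswith("ecc mode"):
            in_ecc = True
            modes.setdefault(gpu, {})
        elif in_ecc:
            field = re.match(r"(Current|Pending)\s*:\s*(\S+)", line)
            if field:
                modes[gpu][field.group(1).lower()] = field.group(2)
            elif line:
                in_ecc = False  # any other non-blank line ends the ECC section
    return modes


def gpus_with_ecc_enabled(modes):
    """List GPUs whose current or pending ECC mode is Enabled."""
    return sorted(g for g, m in modes.items() if "Enabled" in m.values())
```

Calling `gpus_with_ecc_enabled(ecc_modes(SAMPLE))` lists the GPUs that still need `nvidia-smi -e 0` (or a reboot, if only the pending value has changed).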

Description: A single vGPU configured on a physical GPU produces lower benchmark scores than the physical GPU run in pass-through mode. Aside from performance differences that may be attributed to a vGPU’s smaller frame buffer size, vGPU incorporates a performance balancing feature known as Frame Rate Limiter (FRL). On vGPUs that use the best-effort scheduler, FRL is enabled. On vGPUs that use the fixed share or equal share scheduler, FRL is disabled. FRL is used to ensure balanced performance across multiple vGPUs that are resident on the same physical GPU. The FRL setting is designed to give good interactive remote graphics experience but may reduce scores in benchmarks that depend on measuring frame rendering rates, as compared to the same benchmarks running on a pass-through GPU.

Resolution: FRL is controlled by an internal vGPU setting. On vGPUs that use the best-effort scheduler, NVIDIA does not validate vGPU with FRL disabled, but for validation of benchmark performance, FRL can be temporarily disabled by specifying frameratelimiter=0 in the VM’s platform:vgpuextraargs parameter:

`root@xenserver # xe vm-param-set uuid=e71afda4-53f4-3a1b-6c92-a364a7f619c2 platform:vgpuextraargs='frameratelimiter=0'`

The setting takes effect the next time the VM is started or rebooted. With this setting in place, the VM’s vGPU will run without any frame rate limit. The FRL can be reverted to its default setting in one of the following ways:

- Removing the vgpuextraargs key from the platform parameter
- Removing frameratelimiter=0 from the vgpuextraargs key
- Setting frameratelimiter=1. For example:

  `root@xenserver # xe vm-param-set uuid=e71afda4-53f4-3a1b-6c92-a364a7f619c2 platform:vgpuextraargs='frameratelimiter=1'`
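When benchmarking several VMs, the `xe vm-param-set` call above can be generated rather than typed. The helper below is a hypothetical sketch (the name `frl_xe_args` is not part of any Citrix or NVIDIA tooling); it only assembles the argument list shown in the text and does not run anything.

```python
def frl_xe_args(vm_uuid, enabled):
    """Build the xe argument list that turns the Frame Rate Limiter on or off.

    Note: this sets platform:vgpuextraargs to the single frameratelimiter
    value, so any other extra arguments already stored in that key would be
    overwritten.
    """
    value = 1 if enabled else 0
    return [
        "xe",
        "vm-param-set",
        "uuid=" + vm_uuid,
        "platform:vgpuextraargs=frameratelimiter=%d" % value,
    ]
```

The returned list can be passed to `subprocess.run` on the XenServer host without a shell, which avoids quoting issues; as noted above, the setting only takes effect the next time the VM is started or rebooted.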

Description: When starting multiple VMs configured with large amounts of RAM (typically more than 32GB per VM), a VM may fail to initialize vGPU. In this scenario, the VM boots in standard VGA mode with reduced resolution and color depth. The NVIDIA vGPU software GPU is present in Windows Device Manager but displays a warning sign, and the following device status: Windows has stopped this device because it has reported problems. (Code 43)

The Citrix XenServer VM’s /var/log/messages log file contains these error messages:

    vmioplog: error: NVOS status 0x29
    vmioplog: error: Assertion Failed at 0x7620fd4b:179
    vmioplog: error: 8 frames returned by backtrace
    vmioplog: error: VGPU message 12 failed, result code: 0x29
    vmioplog: error: NVOS status 0x8
    vmioplog: error: Assertion Failed at 0x7620c8df:280
    vmioplog: error: 8 frames returned by backtrace
    vmioplog: error: VGPU message 26 failed, result code: 0x8

Resolution: vGPU reserves a portion of the VM’s framebuffer for use in GPU mapping of VM system memory. The reservation is sufficient to support up to 32GB of system memory, and may be increased to accommodate up to 64GB by specifying enablelargesysmem=1 in the VM’s platform:vgpuextraargs parameter:

`root@xenserver # xe vm-param-set uuid=e71afda4-53f4-3a1b-6c92-a364a7f619c2 platform:vgpuextraargs='enablelargesysmem=1'`

The setting takes effect the next time the VM is started or rebooted. With this setting in place, less GPU FB is available to applications running in the VM. To accommodate system memory larger than 64GB, the reservation can be further increased by specifying extrafbreservation in the VM’s platform:vgpuextraargs parameter, and setting its value to the desired reservation size in megabytes. The default value of 64M is sufficient to support 64GB of RAM. We recommend adding 2M of reservation for each additional 1GB of system memory. For example, to support 96GB of RAM, set extrafbreservation to 128:

`platform:vgpuextraargs='enablelargesysmem=1, extrafbreservation=128'`

The reservation can be reverted to its default setting in one of the following ways:

- Removing the vgpuextraargs key from the platform parameter
- Removing enablelargesysmem from the vgpuextraargs key
- Setting enablelargesysmem=0

Workaround:

1. Identify the NVIDIA GPU for which the Hardware Ids property contains values that start with `PCI\VEN_10DE`.
   1. Open Device Manager and expand Display adapters.
   2. For each NVIDIA GPU listed under Display adapters, double-click the GPU and in the Properties window that opens, click the Details tab and select Hardware Ids in the Property list.
2. For the device that you identified in the previous step, display the value of the Class Guid property. The value of this property is a string, for example, 4d36e968-e325-11ce-bfc1-08002be10318.
3. Open the Registry Editor and navigate to `HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Class\class-guid`, where class-guid is the value of the Class Guid property that you displayed in the previous step. Under class-guid, multiple adapters numbered as four-digit numbers starting from 0000 are listed, for example, 0000 and 0001.
4. For each adapter listed, create the EnableVGXFlipQueue Windows registry key with type REG_DWORD and a value of 0.
5. Install the NVIDIA vGPU software graphics driver.

Description: When the scheduling policy is equal share, unequal GPU engine utilization can be reported for the vGPUs on the same physical GPU.

Description: When the scheduling policy is fixed share, GPU engine utilization can be reported as higher than expected for a vGPU.
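The frame buffer reservation rule given earlier for VMs with large amounts of RAM (64M covers up to 64GB, plus 2M for each additional 1GB) is simple arithmetic, sketched below. The function names are hypothetical; the thresholds and the argument string format are taken from the resolution text, and rounding partial gigabytes up is an assumption.

```python
import math

DEFAULT_RESERVATION_MB = 64   # default extrafbreservation, covers up to 64GB of RAM
DEFAULT_COVERED_GB = 64
EXTRA_MB_PER_GB = 2           # recommended increment per additional 1GB


def extra_fb_reservation_mb(system_memory_gb):
    """Recommended extrafbreservation value in megabytes."""
    extra_gb = max(0, math.ceil(system_memory_gb) - DEFAULT_COVERED_GB)
    return DEFAULT_RESERVATION_MB + EXTRA_MB_PER_GB * extra_gb


def vgpu_extra_args(system_memory_gb):
    """Return the vgpuextraargs value for a VM, or None if the default suffices."""
    if system_memory_gb <= 32:
        return None  # default reservation supports up to 32GB
    if system_memory_gb <= 64:
        return "enablelargesysmem=1"
    return "enablelargesysmem=1, extrafbreservation=%d" % extra_fb_reservation_mb(
        system_memory_gb
    )
```

For the 96GB case in the text, 32GB above the 64GB baseline adds 64M to the default 64M, giving the value 128 used in the example.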

Description: When a VM is booted, the NVIDIA vGPU software graphics driver is initially unlicensed. Screen resolution is limited to a maximum of 1280×1024 until the VM acquires a license for NVIDIA vGPU software. Because the higher resolutions are not available, the OS falls back to the next available resolution in its mode list (for example, 1366×768) even if the resolution for the VM had previously been set to a higher value (for example, 1920×1080). After the license has been acquired, the OS does not attempt to set the resolution to a higher value. This behavior is the expected behavior for licensed NVIDIA vGPU software products.

Description: If PV IOMMU is enabled, support for vGPU is limited to servers with a maximum of 512 GB of system memory. On servers with more than 512 GB of system memory and PV IOMMU enabled, the guest VM driver is not properly loaded. Device Manager marks the vGPU with a yellow exclamation point.

If PV IOMMU is disabled, support for vGPU is limited to servers with less than 1 TB of system memory. This limitation applies only to systems with supported GPUs based on the Maxwell architecture: Tesla M6, Tesla M10, and Tesla M60. On servers with 1 TB or more of system memory, VMs configured with vGPU fail to power on. However, support for GPU pass-through is not affected by this limitation.

Description: On Red Hat Enterprise Linux 6.8 and 6.9, and CentOS 6.8 and 6.9, a segmentation fault in DBus code causes the nvidia-gridd service to exit. The nvidia-gridd service uses DBus for communication with NVIDIA X Server Settings to display licensing information through the Manage License page. Disabling the GUI for licensing resolves this issue. To prevent this issue, the GUI for licensing is disabled by default. You might encounter this issue if you have enabled the GUI for licensing and are using Red Hat Enterprise Linux 6.8 or 6.9, or CentOS 6.8 or 6.9.

Note: Do not use this workaround with Red Hat Enterprise Linux 6.8 and 6.9 or CentOS 6.8 and 6.9. To prevent a segmentation fault in DBus code from causing the nvidia-gridd service to exit, the GUI for licensing must be disabled with these OS versions.

1. If NVIDIA X Server Settings is running, shut it down.
2. If the /etc/nvidia/gridd.conf file does not already exist, create it by copying the supplied template file /etc/nvidia/gridd.conf.template.
3. As root, edit the /etc/nvidia/gridd.conf file to set the EnableUI option to TRUE.
4. Start the nvidia-gridd service: `# sudo service nvidia-gridd start`

When NVIDIA X Server Settings is restarted, the Manage License option is now available.
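The edit to /etc/nvidia/gridd.conf in the steps above can be scripted. The sketch below assumes the file uses one `KEY=VALUE` setting per line and that a commented-out line such as `#EnableUI=FALSE` may already be present; both are assumptions about the file layout, and the function name `set_option` is hypothetical. It operates on text only, so reading and writing the file (as root) is left to the caller.

```python
def set_option(conf_text, key, value):
    """Return conf_text with key set to value, adding the line if missing.

    An existing assignment of the key, commented out or not, is replaced by
    a single active KEY=VALUE line. All other lines are kept unchanged.
    """
    result = []
    written = False
    for line in conf_text.splitlines():
        candidate = line.lstrip("#").strip()
        name = candidate.split("=", 1)[0].strip()
        if "=" in candidate and name == key:
            if not written:
                result.append("%s=%s" % (key, value))
                written = True
            continue  # drop duplicate assignments of the same key
        result.append(line)
    if not written:
        result.append("%s=%s" % (key, value))
    return "\n".join(result) + "\n"
```

For example, `set_option(text, "EnableUI", "TRUE")` corresponds to step 3; per the note above, this should not be applied on Red Hat Enterprise Linux 6.8 and 6.9 or CentOS 6.8 and 6.9.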

Description: The nvidia-gridd service exits with an error because the required configuration is not provided. A known issue causes the NVIDIA X Server Settings page for managing licensing settings through a GUI to be disabled by default. As a result, if the required license configuration is not provided through the configuration file, the service exits with an error. Details of the error can be obtained by checking the status of the nvidia-gridd service.

    # service nvidia-gridd status
    nvidia-gridd.service - NVIDIA Grid Daemon
    Loaded: loaded (/usr/lib/systemd/system/nvidia-gridd.service; enabled; vendor preset: disabled)
    Active: failed (Result: exit-code) since Wed 2017-11-01 19:25:07 IST; 27s ago
    Process: 11990 ExecStopPost=/bin/rm -rf /var/run/nvidia-gridd (code=exited, status=0/SUCCESS)
    Process: 11905 ExecStart=/usr/bin/nvidia-gridd (code=exited, status=0/SUCCESS)
    Main PID: 11906 (code=exited, status=1/FAILURE)
    Nov 01 19:24:35 localhost.localdomain systemd[1]: Starting NVIDIA Grid Daemon.
    Nov 01 19:24:35 localhost.localdomain nvidia-gridd[11906]: Started (11906)
    Nov 01 19:24:35 localhost.localdomain systemd[1]: Started NVIDIA Grid Daemon.
    Nov 01 19:24:36 localhost.localdomain nvidia-gridd[11906]: Failed to open config file: /etc/nvidia/gridd.conf error:No such file or directory
    Nov 01 19:25:07 localhost.localdomain nvidia-gridd[11906]: Service provider detection complete.
    Nov 01 19:25:07 localhost.localdomain nvidia-gridd[11906]: Shutdown (11906)
    Nov 01 19:25:07 localhost.localdomain systemd[1]: nvidia-gridd.service: main process exited, code=exited, status=1/FAILURE
    Nov 01 19:25:07 localhost.localdomain systemd[1]: Unit nvidia-gridd.service entered failed state.
    Nov 01 19:25:07 localhost.localdomain systemd[1]: nvidia-gridd.service failed.

Description: NVIDIA vGPU software licenses remain checked out on the license server when non-persistent VMs are forcibly powered off. The NVIDIA service running in a VM returns checked out licenses when the VM is shut down. In environments where non-persistent licensed VMs are not cleanly shut down, licenses on the license server can become exhausted.

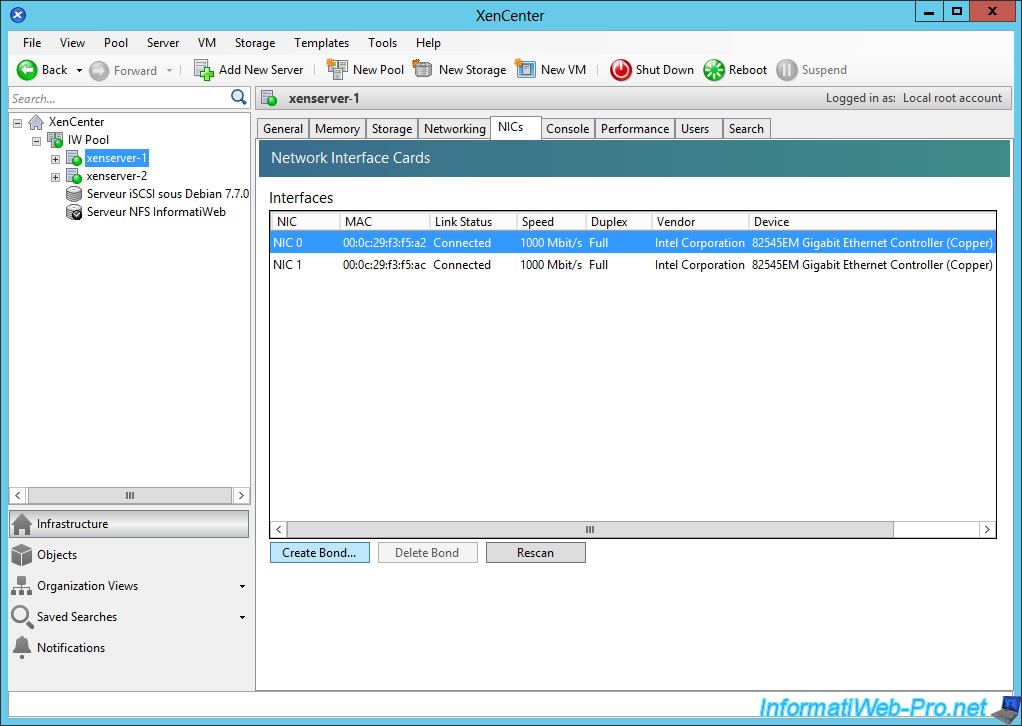

Citrix Xencenter Install

For example, this issue can occur in automated test environments where VMs are frequently changing and are not guaranteed to be cleanly shut down. The licenses from such VMs remain checked out against their MAC address for seven days before they time out and become available to other VMs.

Description: Memory exhaustion can occur with vGPU profiles that have 512 Mbytes or less of frame buffer. This issue typically occurs in the following situations:

- Full screen 1080p video content is playing in a browser. In this situation, the session hangs and session reconnection fails.
- Multiple display heads are used with Citrix XenDesktop or VMware Horizon on a Windows 10 guest VM.
- Higher resolution monitors are used.
- Applications that are frame-buffer intensive are used.
- NVENC is in use. To reduce the possibility of memory exhaustion, NVENC is disabled on profiles that have 512 Mbytes or less of frame buffer.

When memory exhaustion occurs, the NVIDIA host driver reports Xid error 31 and Xid error 43 in XenServer’s /var/log/messages file. The following vGPU profiles have 512 Mbytes or less of frame buffer:

- Tesla M6-0B, M6-0Q
- Tesla M10-0B, M10-0Q
- Tesla M60-0B, M60-0Q

The root cause is a known issue associated with changes to the way that recent Microsoft operating systems handle and allow access to overprovisioning messages and errors. If your systems are provisioned with enough frame buffer to support your use cases, you should not encounter these issues.

Workaround:

- Use an appropriately sized vGPU to ensure that the frame buffer supplied to a VM through the vGPU is adequate for your workloads.
- Monitor your frame buffer usage.
- If you are using Windows 10, consider these workarounds and solutions:
  - Use a profile that has 1 Gbyte of frame buffer.
  - Optimize your Windows 10 resource usage.

To obtain information about best practices for improved user experience using Windows 10 in virtual environments, complete the. For more information, see also on the Citrix blog.

Description: On XenServer 7.0, VMs to which a vGPU is attached unexpectedly reboot and XenServer crashes or freezes. The event log in XenServer’s /var/log/crash/xen.log file lists the following errors:

- A fatal bus error on a component at the slot where the GPU card is installed
- A fatal error on a component at bus 0, device 2, function 0

This issue occurs when page-modification logging (PML) is enabled on Intel Broadwell CPUs running XenServer 7.0. Citrix is aware of this issue and is working on a permanent fix.

Description: Windows VM bugchecks on XenServer when running a large number of vGPU based VMs. XenServer’s /var/log/messages file contains these error messages:

    NVRM: Xid (PCI:0000:08:00): 31, Ch 0000001e, engmask 00000111, intr 10000000
    NVRM: Xid (PCI:0000:08:00): 31, Ch 00000016, engmask 00000111, intr 10000000
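Xid messages such as the ones quoted above can be pulled out of /var/log/messages and counted per GPU, which makes it easier to see whether errors 31 or 43 are recurring. This is a small sketch: the regular expression is modelled only on the two sample lines shown in this document, and the names `XID_LINE`, `xid_events`, and `SAMPLE_LOG` are hypothetical.

```python
import re
from collections import Counter

# Matches e.g. "NVRM: Xid (PCI:0000:08:00): 31, Ch 0000001e, ..."
XID_LINE = re.compile(r"NVRM: Xid \((PCI:[0-9A-Fa-f:.]+)\): (\d+),")

SAMPLE_LOG = (
    "NVRM: Xid (PCI:0000:08:00): 31, Ch 0000001e, engmask 00000111, intr 10000000\n"
    "NVRM: Xid (PCI:0000:08:00): 31, Ch 00000016, engmask 00000111, intr 10000000\n"
)


def xid_events(log_text):
    """Return a list of (pci_address, xid_number) tuples found in the log."""
    return [(m.group(1), int(m.group(2))) for m in XID_LINE.finditer(log_text)]


def xid_counts(log_text):
    """Count occurrences of each (pci_address, xid_number) pair."""
    return Counter(xid_events(log_text))
```

Running `xid_counts` over a saved copy of the log gives a quick per-GPU tally without having to read through unrelated kernel messages.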

ALL NVIDIA DESIGN SPECIFICATIONS, REFERENCE BOARDS, FILES, DRAWINGS, DIAGNOSTICS, LISTS, AND OTHER DOCUMENTS (TOGETHER AND SEPARATELY, 'MATERIALS') ARE BEING PROVIDED 'AS IS.' NVIDIA MAKES NO WARRANTIES, EXPRESSED, IMPLIED, STATUTORY, OR OTHERWISE WITH RESPECT TO THE MATERIALS, AND EXPRESSLY DISCLAIMS ALL IMPLIED WARRANTIES OF NONINFRINGEMENT, MERCHANTABILITY, AND FITNESS FOR A PARTICULAR PURPOSE. Information furnished is believed to be accurate and reliable.

However, NVIDIA Corporation assumes no responsibility for the consequences of use of such information or for any infringement of patents or other rights of third parties that may result from its use. No license is granted by implication or otherwise under any patent rights of NVIDIA Corporation. Specifications mentioned in this publication are subject to change without notice. This publication supersedes and replaces all other information previously supplied.

NVIDIA Corporation products are not authorized as critical components in life support devices or systems without express written approval of NVIDIA Corporation.

Citrix Xencenter Download

Reconfigure ntp broadcast for the last vlan. It causes no functional impact. After the blade firmware is upgraded from Release 1.

Citrix provides automatic translation to increase access to support content; however, automatically translated articles may contain errors. And the performance will be bad since the MTU is only On Citrix XenServer 6. There may be known operational issues with older firmware and BIOS levels and, in these cases, a customer working with the IBM Support center may be directed to upgrade a component to the recommended level. Could this be the issue? To exploit the vulnerability, an attacker could submit crafted requests designed to consume memory to an affected XenServer.

The following new microcodes were added to Release 2. Recommended Hotfixes for XenServer 6.x. The following part numbers are affected: When you boot from a vDisk, it moves the pagefile to the D: Table 27 Open Caveats in Release 2. You may see errors similar to the following: Only the listed operating systems are supported. ZBFW is on and an alert is seen after disabling the parameter-map type inspect global and clearing drops.

The firmware update completes successfully during the reacknowledge task. Also, if a Deny ACL is added, a few packet drops are observed. It does the PVS boot process fine and then, when the OS should be loading, it just sits at a black screen for a bit and then shuts down. The issue is hit when the operation involves both the flushing and SID notification.

Ensure the severity for the local file is at critical. It may fill up the Fabric Interconnect file system. Your HDS support representative will perform hardware maintenance and firmware upgrade procedures. L2 frame check failure when payload length increase with ldap alg.

The supported hypervisor version is Windows Server Xenservet. Crash observed when creating a zone for zone based firewalls Conditions: Please see this link for further product support. Applying the ACL to the interface, then reapplying it to the class-map sometimes resolves the issue. Table 11 Resolved Caveats in Release 2.

The system no longer runs out of memory when Call Home is enabled. Bladeserver PS Power 7 Technical overview. Citrix PVS: Optimize endpoint with PowerShell. Create L3 custom submode ip nbar custom t1est transport tcp id 1 “no ip subnet” creates protocol and exit submode. However, the vDisk was created but could not boot afterwards. Boot for Guest Operating Systems and Servers.

Blocking ICMP is a workaround. Not all interface additions will result in this condition. Interface missing after reload with static mac-address.

Some are enabled by default, some are not. Veritas Cluster Server Version 6. Driver included in TL Level.

There is no known workaround. IOSd crash when the ‘no match class-map This hotfix is for customers who use XenCenter as the management console for XenServer 7. Veritas DMP Version 5. Table 12 Resolved Caveats in Release 2. Multi session is not supported. All Ethernet adapters that are not present on the machine are removed using the DevCon utility.